Generative AI generates Gell-Mann amnesia

Briefly stated, the Gell-Mann Amnesia effect is as follows. You open the newspaper to an article on some subject you know well. In Murray’s case, physics. In mine, show business. You read the article and see the journalist has absolutely no understanding of either the facts or the issues. Often, the article is so wrong it actually presents the story backward—reversing cause and effect. I call these the “wet streets cause rain” stories. Paper’s full of them.

… In any case, you read with exasperation or amusement the multiple errors in a story, and then turn the page to national or international affairs, and read as if the rest of the newspaper was somehow more accurate about Palestine than the baloney you just read. You turn the page, and forget what you know. That is the Gell-Mann Amnesia effect. I’d point out it does not operate in other arenas of life. In ordinary life, if somebody consistently exaggerates or lies to you, you soon discount everything they say. In court, there is the legal doctrine of falsus in uno, falsus in omnibus, which means untruthful in one part, untruthful in all. But when it comes to the media, we believe against evidence that it is probably worth our time to read other parts of the paper. When, in fact, it almost certainly isn’t. The only possible explanation for our behavior is amnesia. – Michael Crichton

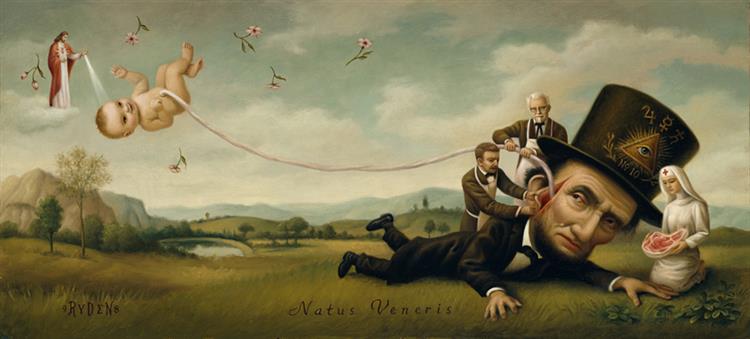

I remember the first aesthetically coherent image I saw from a generative AI program, probably DALL-E 2. Or rather, I remember the impression it made on me. I immediately thought: “they¹ used a picture from the artist who did the album art for that Red Hot Chili Peppers album, the one with ‘Aeroplane.’”

That artist was Mark Ryden, the pop surrealist who painted the cover of One Hot Minute. The generated image had clearly drawn so heavily on his style that the resemblance was apparent even to a viewer who had last seen his work in a music zine during the Clinton years.

I’ve had the same feeling several times since then, with different generative AI programs and outputs. Indeed, you can summon it at will by loading a LoRA trained on a particular artist’s work into Stable Diffusion.

It occurs to me that even when I don’t get this feeling of vague recognition, some other viewer might. Generative AI induces a particular type of Gell-Mann amnesia: we spot the borrowing when an output lands in territory we know, then turn to outputs outside our expertise and treat them as original, forgetting that someone who knows that territory would probably recognize the source.

-

¹ I have always had the feeling that generative AI is an API to a massive set of collection and curation decisions made by a group of people with vested interests. Treating its products as merely the inevitable outcomes of an algorithm seems inaccurate, if not obfuscatory.